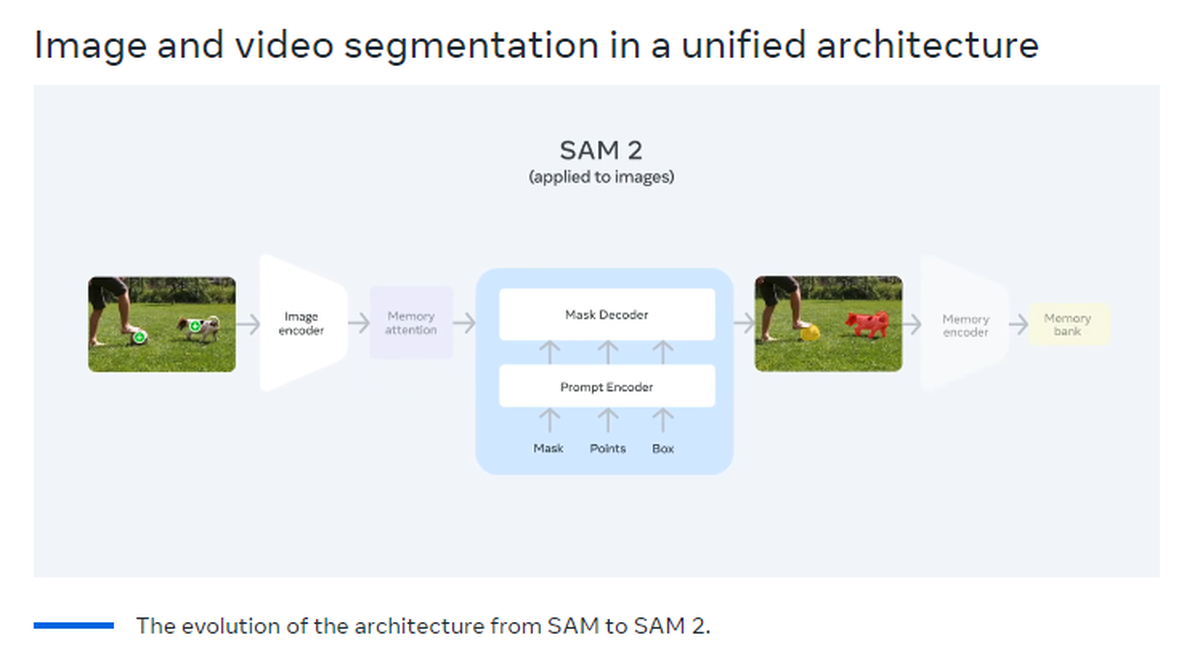

Demo samples show how SAM 2 tracks and isolates video elements

Photo Credit: Screenshots sourced from Meta and compiled on Canva

Meta has introduced a new AI model called Segment Anything Model 2, or SAM 2, which it says can identify which pixels belong to a given object in a video.

The Facebook parent's original Segment Anything Model, released last year, helped in the development of Instagram features such as ‘Backdrop’ and ‘Cutouts.’ SAM 2 extends this capability to video, with Meta claiming that SAM 2 could “segment any object in an image or video, and consistently follow it across all frames of a video in real-time.”

Beyond social media and mixed reality, Meta said its earlier segmentation model has also been used in ocean research, disaster relief, and cancer screening.


How segmentation works in Meta’s SAM 2

Photo Credit: Meta

“SAM 2 could also be used to track a target object in a video to aid in faster annotation of visual data for training computer vision systems, including the ones used in autonomous vehicles. It could also enable creative ways of selecting and interacting with objects in real-time or in live videos,” said Meta in a blog post.

The social media company invited users to try out the model, which is being released under a “permissive” Apache 2.0 license.

Meta CEO Mark Zuckerberg discussed the new model with Nvidia CEO Jensen Huang and highlighted its scientific applications, TechCrunch reported.